―Enables the construction of high-performance speech AI with a small amount of Japanese speech data―

Researchers) FUKAYAMA Satoru, Team Leader, OGATA Jun, Collaborating Visitors, Intelligent Media Processing Research Team, Artificial Intelligence Research Center

- Two types of Japanese speech foundation models were constructed from 60,000 hours of Japanese speech data, including rich emotional expressions.

- The ‘Izanami’, which allows easy fine-tuning, and ‘Kushinada’, more capable for emotion and speech recognition, are now publicly available.

- Contribute to the construction and dissemination of voice AI using small amounts of data

Construction of speech AI using the Japanese speech foundation models "Izanami" and "Kushinada

Improved the performance of speech AI, which had been limited by the small amount of training data, by using the feature representation of speech obtained from the Japanese speech foundation model.

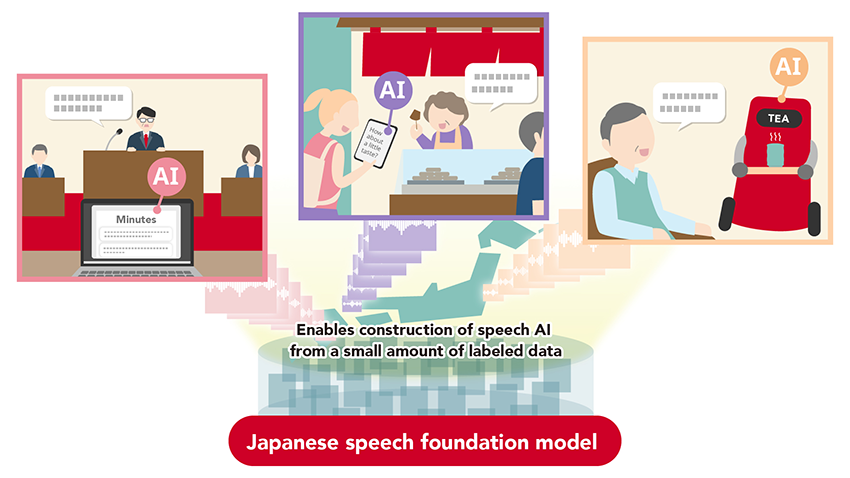

Speech AI, including speech recognition to transcribe speech and analysis of speaker emotions, is used in smart speakers and conference transcription. Speech data is complex data whose characteristics vary depending on the speaker, emotion, and acoustic environment. When an announcer reads in a quiet environment, supervised data is readily available and high-performance speech AI can be built through supervised learning. However, for conversational speech with rich emotional expression and speech of various generations, the amount of teacher data is not sufficient, and the performance of emotion recognition and speech recognition has been insufficient. Under such circumstances, the speech foundation model is attracting attention as a general-purpose AI model that can analyze speech data. Speech foundation models can be constructed through self-supervised learning without labeled data. By using the general-purpose feature representation of speech obtained through the speech foundation model, a high-performance speech AI can be built with a small amount of labeled speech data, and the speech AI built in this way is expected to be used in places such as nursing homes.

Researchers at AIST has released two Japanese speech foundation models, "Izanami" and "Kushinada," which can be used to build high-performance speech AI.

A speech infrastructure model is a general-purpose AI model for processing and analyzing speech data, which is increasingly being applied to speech recognition and speech emotion recognition. Building a speech foundation model requires at least a few thousand hours of speech data, which is based on the target language and the scene in which it is used. However, speech data such as conversational speech is scarce compared to single-talker speech, and speech AI performance has been insufficient for conversational speech that includes emotionally rich expressions.

We have built and released two Japanese speech foundation models, "Kushinada" and "Izanami," using the largest scale of Japanese speech data ever for creating a foundation model, 60,000 hours. The models are named after Japanese mythology in the hope that they will serve as creators and supporters of various types of speech AI in the future.

“Izanami" can be easily fine-tuned using user data, and "Kushinada" shows high performance in Japanese speech emotion recognition and speech recognition. These features enable the construction of high-performance speech AI even when only a small amount of labeled data is available, such as in the case of elderly people's speech or conversations containing emotionally rich expressions. In the future, we will work on improving speech recognition performance for Japanese dialects. It is expected to be used in a variety of situations, such as improving the problem of poor performance of speech AI due to regional and generational differences, and taking minutes in local assemblies.

The model can be downloaded from the AI model publishing platform Hugging Face (https://huggingface.co/imprt).

The Japanese speech foundation models "Izanami" and "Kushinada" are available for download from the AI model publishing platform Hugging Face (https://huggingface.co/imprt).