Norio Nakamura (Senior Research Scientist) of the Human Ubiquitous-Environment Interaction Group (Leader: Akio Utsugi), the Human Technology Research Institute (Director: Motoyuki Akamatsu), the National Institute of Advanced Industrial Science and Technology (AIST; President: Tamotsu Nomakuchi), has developed "i3Space," a system that presents a tactile sensation (sense of touch) and a kinesthetic sensation (resistance) in the air when a user views 3D images. The system lets users design the shapes of objects while simultaneously confirming how the objects feel when touched. It combines a 3D television with a small non-base-type haptic interface using sensory illusion, which presents highly sensitive, continuous tactile and kinesthetic sensations by exploiting the human tendency to mistake certain vibration patterns for actual tactile and kinesthetic sensations.

This system is an application of the technology that creates the feeling of touch and resistance in the air by presenting virtual tactile and kinesthetic sensations. Because the haptic interface is equipped with a marker for position detection, the system can recognize the user's movement and present tactile and kinesthetic sensations in real time in accordance with that movement, so that the user feels as if he or she is touching a 3D image. The system is expected to be used in surgery simulators and in 3D CAD (computer-aided design) as a three-dimensional interface that integrates tactile and kinesthetic senses with other senses such as sight and hearing.

The details of this technology will be presented at CEDEC 2010, a conference for game developers, to be held at Pacifico Yokohama from Aug. 31 to Sept. 2, 2010.

Figure 1 Devices developed in this study: highly sensitive non-base-type haptic interface using sensory illusion (left), touchable 3D television (middle), and the non-base-type haptic interface using sensory illusion equipped with a marker for position measurement (right)

In recent years, devices such as 3D displays have made it possible to enjoy a sense of presence and realism in 3D images. Intuitive operability has also improved, for example through the multi-touch panels used in smartphones. Three-dimensional images and touch operation are about to have a significant impact on the development of various products such as game devices. For example, a "touchable 3D television," which gives the user the feeling of touch and resistance in the air when touching a 3D image, is expected to make surgery simulators and 3D CAD-based design a reality.

The major conventional methods for presenting tactile and kinesthetic sensations are based on robot arm-type interfaces. With these methods, the device itself may restrict the user's movement; for example, the arms may collide when the device is operated with multiple fingers or both hands. In recent years, small portable devices built around eccentric motors have been developed. However, indicating contact with an image through the vibration of an eccentric motor may feel unnatural to the user, because these devices cannot convey the direction of contact or of the resistance force. Moreover, these tactile and kinesthetic interfaces lack the functionality and performance needed to provide a feeling of touch and presence in a 3D space that contains no physical object.

AIST aims to create an environment for information assistance and behavior support that is compatible with the characteristics of human cognitive behavior through the research and development of technologies for measuring and evaluating human characteristics. Thus far, AIST has developed "GyroCubeSensuous," a non-base-type haptic interface using sensory illusion, which can convey the position and size of a target object and present the perception of hardness and of changes in movement and shape (press release on April 11, 2005). We have also succeeded in reducing the size and weight of the interface (showcased at the Virtual Reality Expo, June 27, 2007).

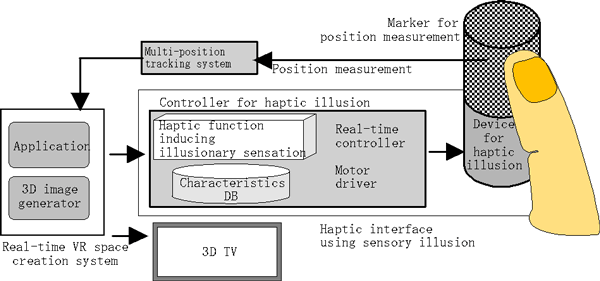

The developed "i3Space" is a system for creating a virtual reality (VR) space. It gives the user a feeling of touch and resistance in the air when viewing 3D images (haptization), and it allows virtual objects in 3D images to be manipulated directly with the fingers (three-dimensionalization of multi-touch operations) (Fig. 2). The concept behind i3Space is to offer an activity space that helps users develop insights on the basis of illusions, intuitive comprehension of space, and natural operability. The system consists of three parts: a real-time VR space creation system, which simulates the tactile and kinesthetic sensations for 3D images; the non-base-type haptic interface using sensory illusion (the device that presents the tactile and kinesthetic sensations, together with its controller); and the multi-position tracking system, which measures the movement of the fingers.

The real-time VR space creation system simulates the environment and the movement of physical models in the VR space on a computer, and generates reaction forces and 3D images according to the user's actual movement. It calculates the force exerted on the physical models from the user's movement and finger positions, simulates the deformation and movement of the models, and then generates the forces exerted on the user along with the 3D images. This series of processes runs in real time so that the system can respond to the user's movement as it happens.
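The three steps above (force on the model, simulation of the model, force returned to the user) can be sketched as one pass of a simulation loop. The following is a minimal one-dimensional illustration, not the i3Space implementation: the `Block` class, the `step` function, the penalty-spring contact model, and all parameter values are assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class Block:
    """Hypothetical 1D virtual object; `x` is the face the finger touches."""
    x: float            # position of the touched face (m)
    v: float = 0.0      # velocity (m/s)
    mass: float = 0.2   # kg (assumed value)


def step(block, finger_x, dt=0.001, stiffness=500.0, drag=2.0):
    """Advance the simulation by one time step of length dt.

    The finger approaches from the -x side. Returns the reaction force to
    present to the user in newtons (negative values push the finger back).
    """
    # 1. Force on the model from the user's movement:
    #    a penalty spring proportional to how far the finger has penetrated.
    penetration = max(0.0, finger_x - block.x)
    force_on_block = stiffness * penetration

    # 2. Simulate the model's movement (semi-implicit Euler, viscous drag).
    accel = (force_on_block - drag * block.v) / block.mass
    block.v += accel * dt
    block.x += block.v * dt

    # 3. The force exerted on the user is equal and opposite.
    return -force_on_block
```

Calling `step` once per tracking sample, for example `force = step(block, finger_x)`, yields both the updated model state for rendering and the force to send to the haptic interface.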

The multi-position tracking system is a system for measuring the position of a marker attached to a fingertip by surrounding the user with several cameras. By using multiple cameras, it is possible to measure the position without blind spots.
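This release does not describe the tracking algorithm itself. One common way to recover a marker position from several cameras is to treat each camera's observation as a ray and find the point closest to all rays in the least-squares sense. The sketch below assumes that approach; the function `triangulate` and its ray-based input format are hypothetical.

```python
import math


def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def triangulate(rays):
    """Least-squares intersection of camera rays.

    rays: list of (origin, direction) pairs, each a 3-tuple. Each ray starts
    at a camera and points toward the observed marker.
    Returns the 3D point minimizing the summed squared distance to all rays.
    """
    A = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for origin, direction in rays:
        norm = math.sqrt(sum(c * c for c in direction))
        d = [c / norm for c in direction]
        # Accumulate the projector (I - d d^T), which removes the component
        # along the ray and keeps only the perpendicular distance.
        for i in range(3):
            for j in range(3):
                proj = (1.0 if i == j else 0.0) - d[i] * d[j]
                A[i][j] += proj
                b[i] += proj * origin[j]
    det = _det3(A)
    if abs(det) < 1e-12:
        raise ValueError("rays are parallel; position is not determined")
    # Solve A p = b with Cramer's rule (adequate for a 3x3 system).
    point = []
    for k in range(3):
        Ak = [[b[i] if j == k else A[i][j] for j in range(3)] for i in range(3)]
        point.append(_det3(Ak) / det)
    return tuple(point)
```

Because every additional non-parallel ray adds a constraint, a marker hidden from one camera can still be located as long as at least two other cameras see it, which is consistent with the blind-spot argument above.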

The "GyroCubeSensuous" device, which was developed by AIST, has been employed as the haptic interface.

Figure 2 System configuration of i3Space

1. Haptization of 3D images (Fig. 3)

With conventional touch panels, it is easy to select or confirm an operation point, since the target of contact lies on the flat panel. In contrast, when touching a 3D image without tactile and kinesthetic feedback in the air, the user must gaze at the contact point on the image to confirm the operation, and natural operability is hard to achieve because the 3D image returns no reaction force. The developed system therefore measures the position of the fingertip, calculates the point of contact between the finger and the 3D image, determines the interaction between the objects, and presents force feedback through the haptic interface, making the user feel as if he or she is touching the 3D image.
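The chain from fingertip position to contact point to force feedback can be illustrated with the simplest possible virtual object, a sphere. This is a sketch under stated assumptions: the function `contact_force`, the spherical object, and the linear spring model with its `stiffness` value are illustrative choices, not details taken from i3Space.

```python
import math


def contact_force(fingertip, center, radius, stiffness=300.0):
    """Contact between a fingertip and a hypothetical virtual sphere.

    Returns (contact_point, force). When the fingertip is outside the sphere
    (or exactly at its center, where no direction is defined), contact_point
    is None and the force is zero.
    """
    offset = tuple(f - c for f, c in zip(fingertip, center))
    dist = math.sqrt(sum(o * o for o in offset))
    if dist >= radius or dist == 0.0:
        return None, (0.0, 0.0, 0.0)

    # Surface normal at the point of the sphere nearest to the fingertip.
    normal = tuple(o / dist for o in offset)
    contact_point = tuple(c + radius * n for c, n in zip(center, normal))

    # Feedback force grows with penetration depth and pushes outward.
    depth = radius - dist
    force = tuple(stiffness * depth * n for n in normal)
    return contact_point, force
```

The returned force vector carries both magnitude and direction, which is the information a vibration-only device cannot convey.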

When the movement of the finger and the direction of the force do not match, and the 3D image contains no physical object, it is difficult to reproduce drag or friction forces and to exert a force on a finger while it presses against a virtual object. It is even more difficult to exert a continuous force on a stationary finger in the air. By exploiting the sensory illusions that vibrations induce in humans, this system makes it possible to present tactile and kinesthetic sensations for 3D images that contain no physical object.

Additionally, in stereoscopic viewing, placing too much emphasis on making objects project out of the display screen so that they appear within the user's reach can cause eyestrain. This is due to poor separation between the images seen by the right eye and the left eye, and to the mismatch between the position at which the user perceives the 3D image and the actual position of the image on the screen. This system therefore adopts indirect haptization: it applies a resistance force to a fingertip held over the 3D image without aligning the positions of the 3D image and the finger.

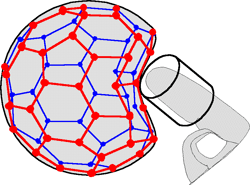

2. 3D multi-touch operation (Fig. 4)

In multi-touch operation on a touch panel, actions such as moving, expanding, shrinking, or deforming are performed intuitively by touching the panel with multiple fingers and moving the contact points. In contrast, the present system uses the positions of the fingers to detect contact between the fingers and a 3D image, movements made to pinch (clip) the 3D image, and movements made to expand or shrink the image after pinching. Because the multi-position tracking system measures finger movement simultaneously from six directions, the fingers are never hidden from all cameras by the palm. Based on this detection, the system calculates the forces between the 3D image and the fingers, controls the non-base-type haptic interface using sensory illusion, and creates the feeling of touch and resistance. This technology enables three-dimensional operations such as moving, deforming, and rotating 3D images through the movements of multiple fingertips.
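As a rough illustration of how clipping and the subsequent expand or shrink movement might be derived from fingertip positions alone, the sketch below compares the current distance between two fingertips with the distance at the moment the object was clipped. The functions `is_clipping` and `scale_factor`, the spherical grab region, and the `grab_radius` value are assumptions for this example and are not taken from i3Space.

```python
import math  # math.dist requires Python 3.8 or later


def is_clipping(thumb, index, object_center, grab_radius=0.03):
    """True when both fingertips lie within grab_radius of the object center."""
    return (math.dist(thumb, object_center) <= grab_radius
            and math.dist(index, object_center) <= grab_radius)


def scale_factor(start_thumb, start_index, thumb, index):
    """Ratio of the current fingertip span to the span when clipping began.

    Values above 1 mean the fingers moved apart (expand); values below 1
    mean they moved together (shrink).
    """
    start_span = math.dist(start_thumb, start_index)
    if start_span == 0.0:
        raise ValueError("fingertips coincide; scale is undefined")
    return math.dist(thumb, index) / start_span
```

Because the computation uses full 3D positions, the same ratio applies whichever axis the fingers move along, unlike a flat panel where the span is confined to the screen plane.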

Figure 3 Feeling touch and resistance for a 3D image

Figure 4 Demonstration of 3D multi-touch operation (deformation)

Application: Design support with 3D CAD based on force feedback (Fig. 5)

In conventional CAD, shape information is entered as numerical values using a mouse or a keyboard. Recently, home electrical appliances whose main selling point is an attractive design have become popular. Because these appliances are designed from conceptual illustrations, the people who draft the designs must digitize the shape information for CAD, and in many cases the numerical data do not accurately reflect the designer's intention. With the developed system, it will become possible to design 3D shapes by adjusting the degree of deformation while confirming the deformation of a 3D image through the reaction force felt as a kinesthetic sensation, and to obtain the resulting design as numerical data. Further, the movement of the user's viewpoint relative to a virtual object in 3D space can be assisted by monitoring shifts in body weight and posture with a balance sensor placed beneath the feet. Just as a potter forms a pot on a potter's wheel, the user can shape a virtual vase three-dimensionally while rotating the 3D image with the balance sensor. The feeling of touch can be used to confirm the shape being formed, so the system can serve as an aid in creative activities while stimulating the user's sensibility.

Figure 5 3D design support with kinesthetic sense feedback

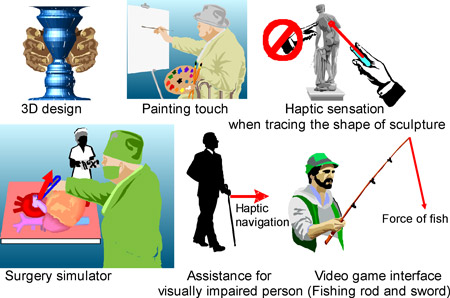

Because AIST developed this technology independently, we aim to launch a venture business based on it. In the future, we intend to reduce the size of the system, enhance its functionality, and apply it to smartphones and other devices. We also plan to carry out development and field tests for various possible applications in collaboration with manufacturers of home electrical appliances and information technology devices (Fig. 6).

Figure 6 Possible Applications